Senior manager in tech: AI puts full-stack engineering jobs at risk, others not so much

Abstract

Despite the rise of LLMs, most (but not all) software engineering jobs are not at risk. Full-stack engineers working on non-critical systems are at risk. Engineers working on complex and/or critical systems are not, because handing such systems over to AI is generally a bad idea. Until modern AI solutions can incorporate symbolic logic, execute logical flows and procedures reliably, and explain their reasoning, any commercially valuable engineering task will be undertaken first and foremost by a human, not a machine. For now, AI will play only a copilot role in these tasks, and only within a limited context. At the same time, the productivity gains from AI will set a new baseline for most engineers: being able to work with an AI copilot is the new norm.

Introduction

Every other article at the beginning of 2025 had a headline like “The software engineer’s job is dead” or “When will AI replace software engineers?” Their aim is to provoke fear, and very few of them are backed by industry knowledge or data. The opposing camp believes that “AI will never replace a software engineer’s job; it’s all hype” and “AI will never be good enough to replace a human”.

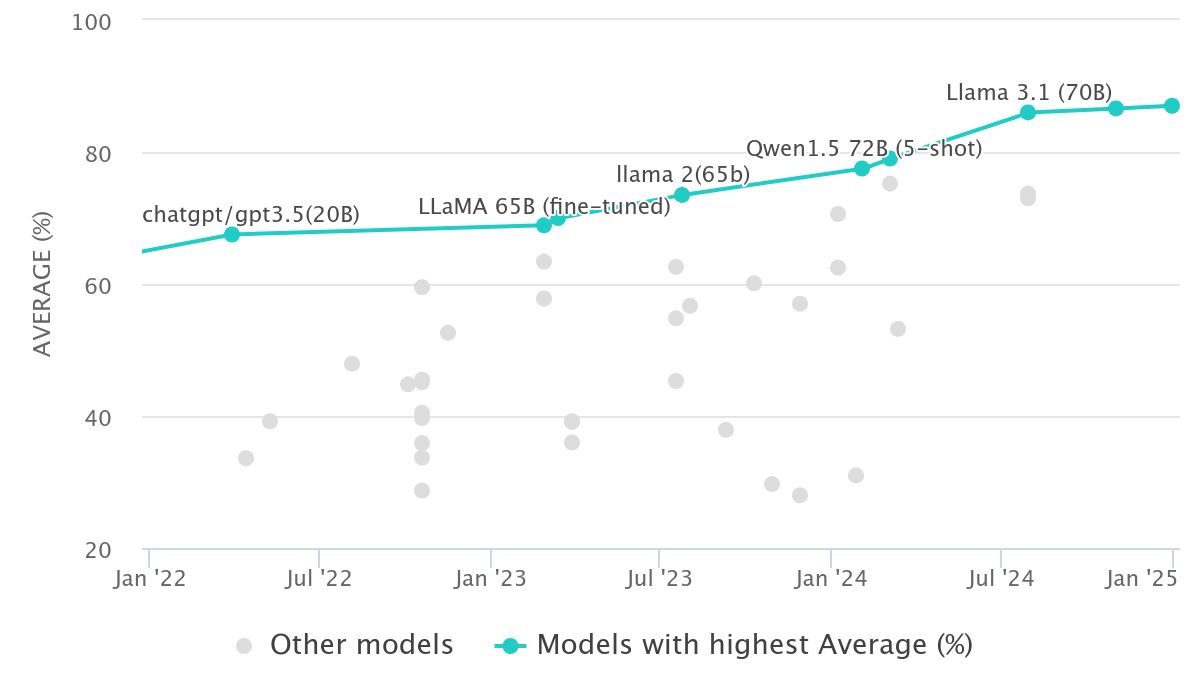

Let’s face it: both are just speculation. We humans are not good at predicting non-linear developments, so we can’t know for sure what the lasting impact will be once the hype is over. What we do see is evidence that the models are getting better and that agents show promise.

Given how the models have developed over the past three years, it’s safe to say they will keep getting better. With the introduction of agents, engineering copilots can now design a system, code it up, and deploy the resources to the cloud. For now, however, most such vibe-coded solutions end up with security holes and are often suboptimal.

What will happen next? They will improve, and the code they generate will reach acceptable quality. That is only reasonable to expect.

Will AI models soon be able to design, implement, and roll out a complex change on an existing microservices architecture and ensure it is released to production without issues? Probably not.

Will AI models soon be able to develop simple apps reliably from spec to production? Probably yes.

What does this mean for current and aspiring software engineers? Should we be worried?

The table below shows how I think system complexity and criticality should factor into the decision to use AI. Long story short: the engineering work on any business-critical system, especially in defense, finance, medicine, or government services, is currently a long way from being handed over to AI.

As an engineering leader, I will avoid using AI in any critical system. For medium-criticality systems, AI will be a great copilot. As for non-critical systems, prototypes, and proof-of-concept tasks, AI will largely take those over.

| System complexity / System criticality | Low criticality | Medium criticality | High criticality |
|---|---|---|---|
| Low complexity | AI will take over 95% of the tasks very soon | AI will be a copilot, helping with repetitive tasks | AI usage is probably a bad idea |
| Medium complexity | AI as a copilot, helping with repetitive tasks on limited parts of the system | AI as a copilot, helping with repetitive tasks on limited parts of the system | AI usage is probably a bad idea |
| High complexity | AI will be a copilot, helping with repetitive tasks | AI will be a copilot, helping with repetitive tasks | AI usage is probably a bad idea |

Table 1: AI impact based on system complexity and system criticality. Criticality is defined by the system’s use, role, and impact. Examples of critical systems include software used in military and medical equipment, software that processes financial transactions or sensitive data, and software that plays a central role in a medium- to large-sized company.

What are the likely impacts on software engineers then?

All low-complexity software engineering tasks will become a commodity. Medium-complexity tasks are at risk too.

Five years from now, software engineers will become more specialized. Already today, many full-stack engineers are at risk.

Senior engineers often solve hard, vaguely defined problems. Their main job is to create business value using software. For such tasks, AI is currently little more than a very convenient search and information-gathering tool.

AI does not understand business value.

Given the increase in productivity, the output of most software engineers will rise, and the focus will shift to creating business value rather than solving mundane problems.

If 4 engineers can do the job of 5, why not keep just 4? From a very simplistic point of view, that even makes sense. But most companies have competitors, and there is little reason to fall behind them by holding back the company’s growth. In my opinion, higher productivity will result in more intense competition. If you have 40 engineers and your competitor has 50, all assisted by AI, who is likely to deliver more features and hold a more attractive position in the market (all else being equal)?

What about junior engineers? Who will need them?

Given the current state of the market, I don’t think much will change here: senior engineers do not appear out of nowhere. My advice to junior engineers is to embrace the productivity boost that AI makes possible, but also to work on shortening your path from junior to mid-level. Early on, your main job may be making sure that AI-generated code is good enough, which means the bar for landing your first job may be higher than before. The GenAI boom can also be a curse: if you rely on AI too much, you might never become a good enough engineer on your own.

A note on the future

Modern LLMs generate output by sampling from probability distributions computed by learned weights, not by applying symbolic logic. At its core, a modern neural network is essentially a very large regression model (a parameterized function fitted to data) with lots of bells and whistles on top (attention mechanisms, the transformer architecture, various activation functions, etc.). If you tell an LLM or an agent to perform a sequence of logical operations, there is no guarantee that it will carry them out exactly as you expect. This is a form of the alignment problem: even if the user intends a simple procedure with a couple of IF statements, an LLM will try to generate output that satisfies the user’s request as phrased, not the user’s actual intention. The result can be something completely different. It is also why hallucinations exist: LLMs generate the most plausible output, and plausible output is often incoherent when the task is complex.
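To make the contrast concrete, here is a toy Python sketch. `approve_refund` is a hypothetical procedure with a couple of IF statements, and it always returns the same answer for the same input. `toy_model_answer` is a hypothetical stand-in for a probabilistic model, not a description of how a real LLM works internally. It puts a made-up 90% of its probability mass on the answer the procedure would give and samples from that distribution, so it usually agrees with the procedure, but not always.

```python
import random

# Hypothetical business rule: a deterministic procedure with two IF statements.
# The same input always yields the same output.
def approve_refund(amount: float, days_since_purchase: int) -> str:
    if days_since_purchase > 30:
        return "reject"
    if amount > 500:
        return "escalate"
    return "approve"


# Hypothetical toy "model": NOT a real LLM. It builds a probability
# distribution over the possible answers and samples one of them.
# The 0.90 / 0.05 / 0.05 split is made up for illustration.
def toy_model_answer(amount: float, days_since_purchase: int, rng: random.Random) -> str:
    answers = ["approve", "escalate", "reject"]
    expected = approve_refund(amount, days_since_purchase)
    # Most of the probability mass goes to the answer the procedure would give.
    weights = [0.90 if a == expected else 0.05 for a in answers]
    return rng.choices(answers, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the run is reproducible
    runs = 1000

    procedure_outputs = {approve_refund(800, 10) for _ in range(runs)}
    print("procedure outputs:", procedure_outputs)  # {'escalate'}

    model_outputs = [toy_model_answer(800, 10, rng) for _ in range(runs)]
    deviations = sum(1 for a in model_outputs if a != "escalate")
    print(f"toy model deviated from the procedure in {deviations} of {runs} runs")
```

Chaining steps makes this worse. Under the same made-up 90% figure, and assuming the steps fail independently, a five-step procedure would be carried out correctly end to end only about 0.9^5 ≈ 59% of the time.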

Until AI solutions can reliably follow a logical procedure, most software engineering jobs are safe.